China's Compute Bet: Can Efficiency Replace Scale?

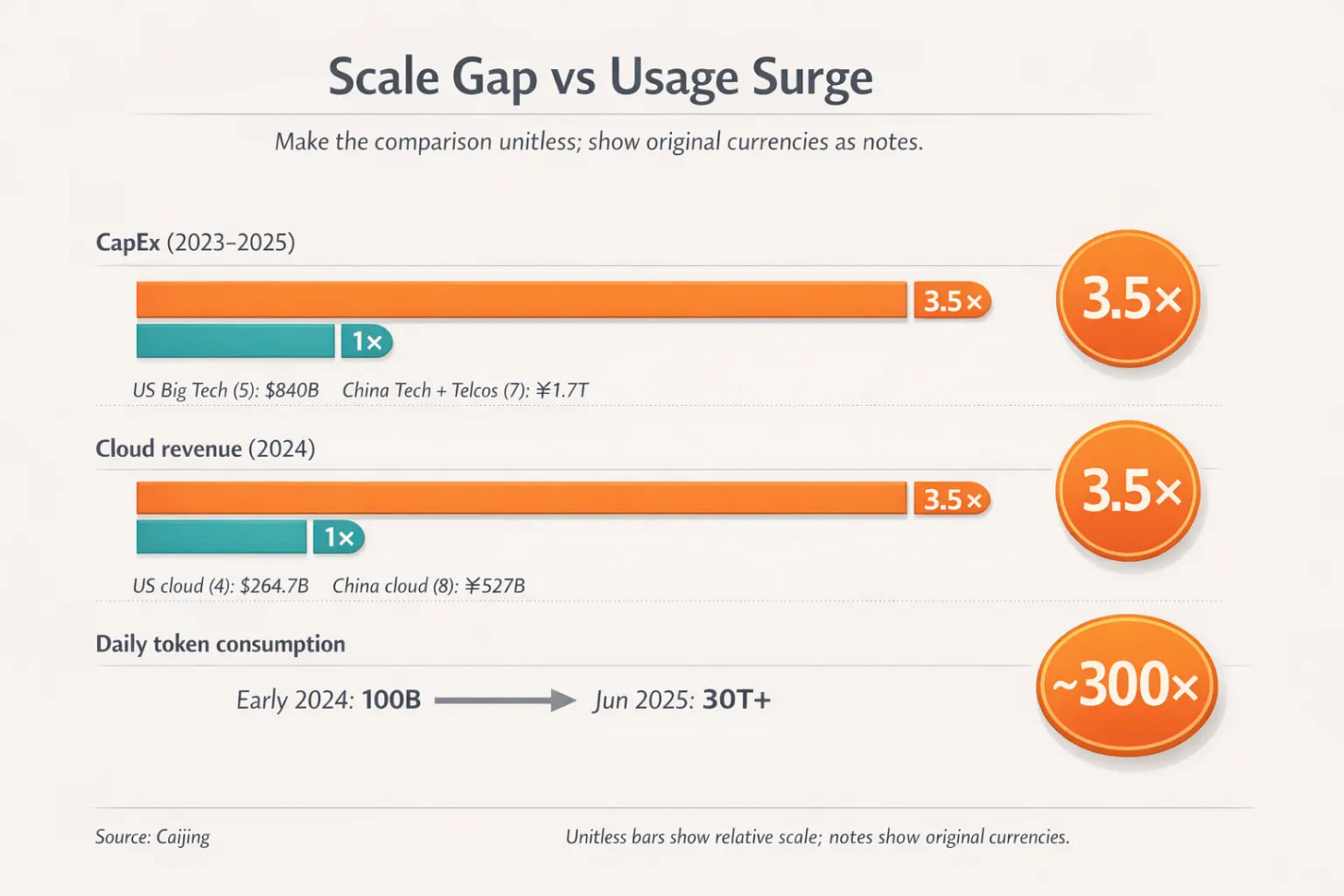

China trails the US in compute spending by 3.5 to 1, yet its token consumption grew 300-fold. How efficiency is bridging the gap, and where it breaks down.

AMD’s stock plunged 17% on February 4. The company beat fourth-quarter revenue estimates by $600 million, and first-quarter guidance came in above consensus. Investors sold anyway.

The reason? Expectations were sky-high. More tellingly, AMD’s fourth-quarter beat relied heavily on $390 million in AI chip sales to China, revenue that was not in Street estimates and caught analysts by surprise. Without those China sales, the data center business would have missed estimates.

The China factor matters more than Wall Street expected. This points to a larger story about how China is navigating compute constraints.

China’s daily token consumption grew from 100 billion in early 2024 to over 30 trillion by mid-2025, a 300-fold increase in roughly eighteen months.
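A 300-fold jump is easier to compare with other growth curves when expressed as a compounding monthly rate. The sketch below uses only the two endpoints reported in the text and assumes a constant rate over exactly 18 months, which the real curve almost certainly did not follow.

```python
import math

# Endpoints as reported in the text (daily tokens).
start_tokens = 100e9      # early 2024: 100 billion per day
end_tokens = 30e12        # mid-2025: 30 trillion per day
months = 18               # assumption: exactly eighteen months apart

growth_multiple = end_tokens / start_tokens
# Constant month-over-month rate that compounds to the same multiple.
monthly_rate = growth_multiple ** (1 / months) - 1
# Months needed to double at that constant rate.
doubling_months = math.log(2) / math.log(1 + monthly_rate)

print(f"multiple: {growth_multiple:.0f}x")
print(f"implied monthly growth: {monthly_rate:.1%}")
print(f"doubling time: {doubling_months:.1f} months")
```

Under that smoothing assumption, the figures work out to roughly 37% growth per month, or a doubling about every 2.2 months.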

This growth rate demands explanation. China trails the United States significantly in compute infrastructure investment. Between 2023 and 2025, five American tech giants (Microsoft, Amazon, Google, Oracle, and Meta) spent approximately $840 billion in capital expenditure. Over the same period, seven major Chinese tech companies and telecom operators invested roughly ¥1.7 trillion, about $240 billion at an exchange rate of roughly 7 yuan to the dollar.

The ratio stands at 3.5 to 1 in America’s favor. Cloud revenue shows a similar gap.
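The ratio follows directly from the capex figures in the text. This short calculation reproduces it, using the approximate 7:1 yuan-to-dollar rate the text assumes; a different exchange rate would shift the result slightly.

```python
# Reported capital expenditure, 2023-2025 (figures from the text).
us_capex_usd_bn = 840        # Microsoft, Amazon, Google, Oracle, Meta
cn_capex_cny_bn = 1_700      # seven Chinese tech companies and telecom operators
cny_per_usd = 7.0            # approximate exchange rate used in the text

cn_capex_usd_bn = cn_capex_cny_bn / cny_per_usd
ratio = us_capex_usd_bn / cn_capex_usd_bn      # US spend per dollar of Chinese spend
share = cn_capex_usd_bn / us_capex_usd_bn      # Chinese spend as a fraction of US spend

print(f"China capex: ${cn_capex_usd_bn:.0f}B")
print(f"US:China ratio: {ratio:.2f} to 1")
print(f"China as share of US: {share:.0%}")
```

The result is about $243 billion for China, a ratio of roughly 3.46 to 1, with Chinese spending at about 29% of the US total.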

So how does a country investing less than one-third as much generate 300-fold growth in AI usage?

The answer lies in a system-level approach connecting four elements: squeezing maximum output from every chip through algorithmic and engineering optimization, leveraging lower energy costs in China’s western regions, embedding AI into manufacturing hardware rather than selling it as software, and feeding the resulting demand back into domestic chip adoption. Efficiency gains lower costs. Lower costs drive adoption. Higher adoption justifies further investment in domestic capabilities.

This model works better for inference and deployment than for frontier model training. Understanding where it applies and where it breaks down matters for anyone making investment decisions in China’s AI sector.

The Efficiency Imperative

When capital scale cannot match competitors, output per unit of capital becomes the defining metric. Chinese companies have turned this constraint into a capability.

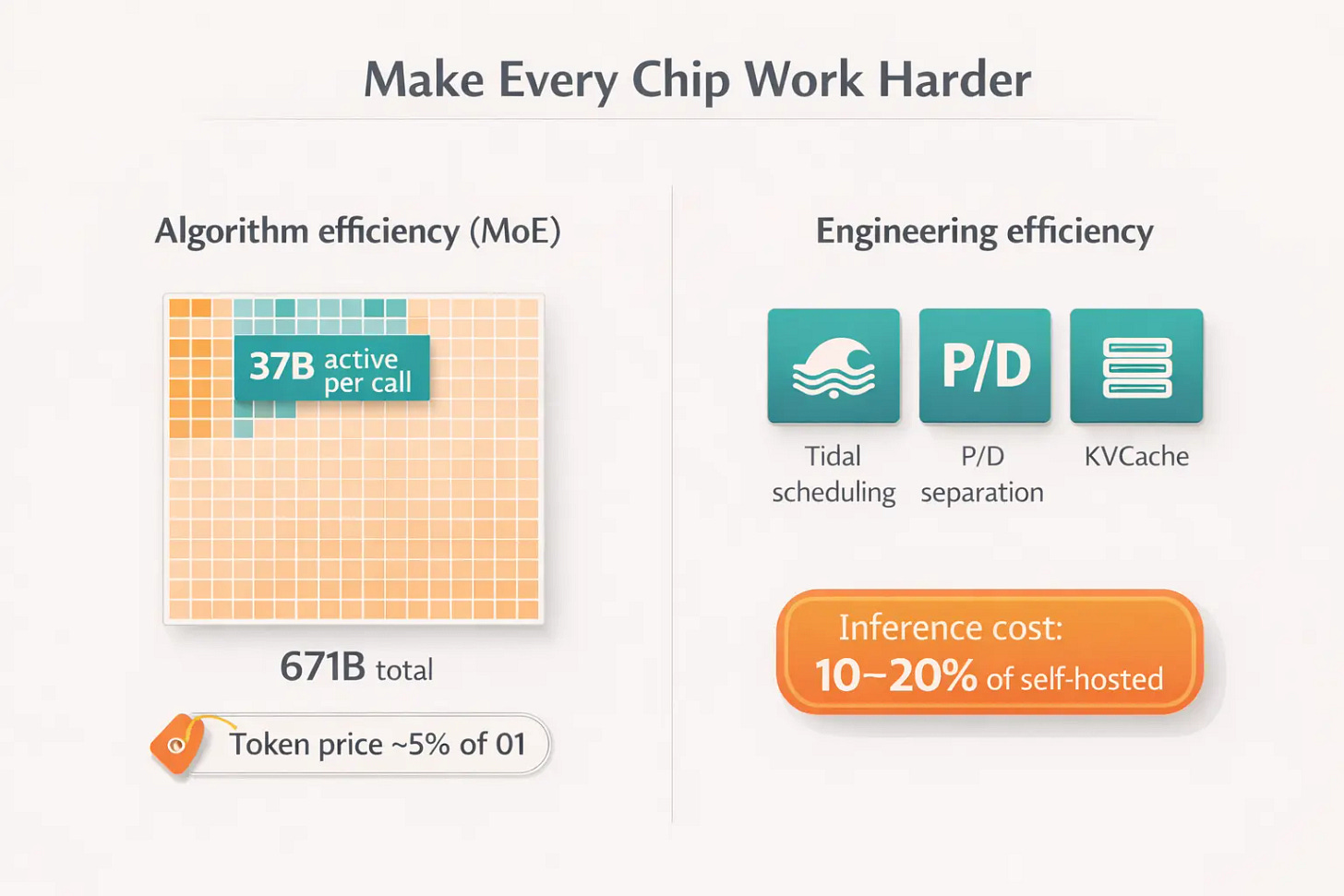

DeepSeek’s R1 model exemplifies the algorithmic approach. Using a Mixture of Experts (MoE) architecture, the model activates only 37 billion of its 671 billion total parameters for each token it processes. Different expert modules run on different chips in parallel, which reduces the workload on any single chip while improving overall utilization. The result: DeepSeek’s token pricing came in at roughly 5% of OpenAI’s o1 model.
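The core MoE idea is that a small router picks a few experts per token and the rest sit idle. The sketch below is a generic top-k softmax gate in pure Python, not DeepSeek’s actual routing function; the toy sizes, the linear router, and the scaling "experts" are hypothetical stand-ins chosen only to show which experts do work.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, router_weights, experts, k):
    """Route one token vector x to its top-k experts and mix their outputs."""
    # Router score per expert: dot product of the token with that expert's router vector.
    scores = [sum(a * b for a, b in zip(x, w)) for w in router_weights]
    # Keep only the k highest-scoring experts; the others are never evaluated.
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    gates = softmax([scores[i] for i in top])
    out = [0.0] * len(x)
    for gate, i in zip(gates, top):
        y = experts[i](x)  # only the selected experts compute anything
        out = [o + gate * v for o, v in zip(out, y)]
    return out, top


# Toy sizes (hypothetical): 8 experts, 2 active per token, 4-dim tokens.
random.seed(0)
dim, n_experts, k = 4, 8, 2
router_weights = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(n_experts)]
# Each toy "expert" just scales its input; real experts are feed-forward networks.
experts = [lambda v, s=s: [s * t for t in v] for s in range(1, n_experts + 1)]
token = [random.gauss(0, 1) for _ in range(dim)]

out, chosen = moe_forward(token, router_weights, experts, k)
print("experts evaluated:", chosen, "of", n_experts)
print(f"R1 active-parameter share: {37 / 671:.1%}")  # 37B of 671B per token
```

In R1’s case the same principle means only about 5.5% of the parameters participate in any one token’s computation, and because each expert is independent, experts can be placed on different chips.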

ByteDance’s Volcano Engine pursues engineering optimization. Through techniques including tidal scheduling (shifting deferrable workloads into off-peak hours), assigning compute-intensive and bandwidth-intensive tasks to the chips best suited to each, and caching context to avoid redundant computation, the company claims it can deliver cloud-based inference at 10% to 20% of the cost of self-hosted data centers.
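Tidal scheduling can be illustrated with a simple greedy policy: fill the idle capacity left by interactive traffic with deferrable batch work, quietest periods first. This is a minimal sketch under assumed inputs, not Volcano Engine’s scheduler; the function name, the six-period load profile, and the compute units are all hypothetical.

```python
def tidal_schedule(online_load, capacity, batch_work):
    """Greedily place deferrable batch work into each period's idle capacity."""
    allocation = [0.0] * len(online_load)
    remaining = batch_work
    # Visit the quietest periods first so batch jobs soak up the troughs.
    for period in sorted(range(len(online_load)), key=lambda p: online_load[p]):
        spare = max(capacity - online_load[period], 0.0)
        used = min(spare, remaining)
        allocation[period] = used
        remaining -= used
        if remaining <= 0:
            break
    return allocation, remaining


# Hypothetical day in six 4-hour periods, in arbitrary compute units.
online = [20, 15, 60, 90, 85, 40]
capacity = 100
batch, left = tidal_schedule(online, capacity, batch_work=120)

before = sum(online) / (capacity * len(online))
after = (sum(online) + sum(batch)) / (capacity * len(online))
print("batch per period:", batch, "unscheduled:", left)
print(f"utilization: {before:.0%} -> {after:.0%}")
```

In this made-up profile, all the batch work lands in the two quietest periods and average utilization of the same hardware rises from about 52% to 72%, which is the kind of gain that lowers cost per token without buying more chips.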

These efficiency gains create space for domestic chips to compete on total cost of ownership rather than raw performance.

Domestic Chips Cross the Usability Threshold

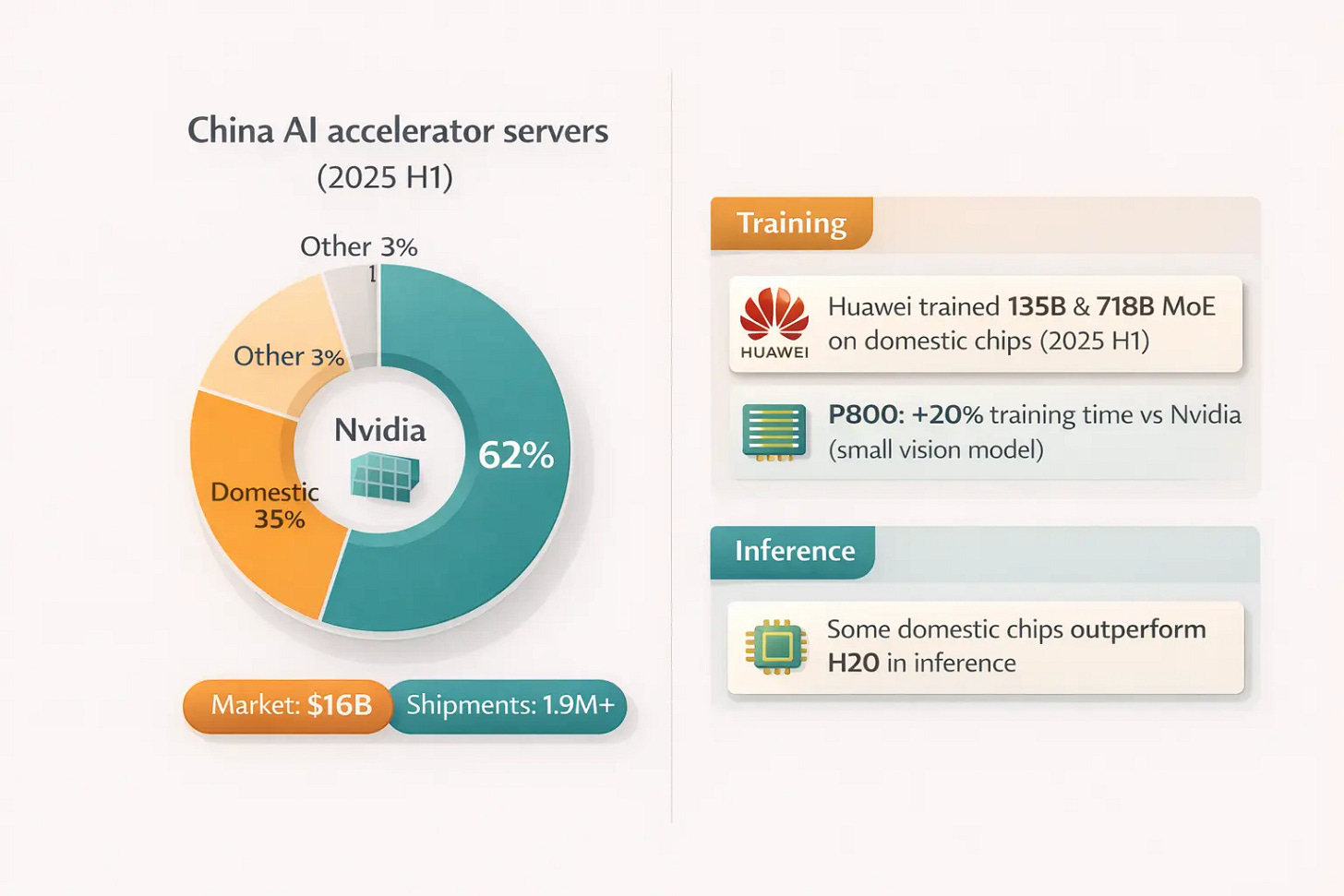

The Chinese AI accelerator server market reached $16 billion in the first half of 2025, more than double the same period a year earlier. Total accelerator chip shipments exceeded 1.9 million units.

NVIDIA still commands 62% market share. Domestic chips now account for 35%. Non-GPU chip demand is growing faster than GPU demand.

Huawei’s Ascend 910 series has emerged as the most widely deployed domestic option. The company committed over 10,000 engineers to closing performance gaps through system engineering. In the first half of 2025, Huawei trained its Pangu Ultra model (135 billion parameters) and Pangu Ultra MoE model (718 billion parameters) entirely on Ascend chips.

Baidu brought online its first 10,000-card Kunlun P800 cluster in February 2025, with plans to scale to 30,000 cards. “Bringing online” means first successful power-on and basic system operation, indicating the design works and can proceed to testing. According to technical sources familiar with the product, precision alignment in typical training scenarios, meaning reproducing the numerical results of reference runs on NVIDIA hardware, is no longer the primary obstacle after two years of iteration.
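One way to picture a precision-alignment check is to compare a training metric from a new chip against a reference run within a relative tolerance. The sketch below assumes that framing; the loss curve is synthetic, and the "new hardware" run is simulated by rounding every value to IEEE half precision with Python’s `struct` module, so it illustrates the check rather than any vendor’s actual procedure.

```python
import math
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def max_rel_error(reference, candidate):
    """Largest relative deviation of candidate values from the reference."""
    return max(abs(c - r) / abs(r) for r, c in zip(reference, candidate))


def is_aligned(reference, candidate, rtol):
    """True if every candidate value is within rtol (relative) of the reference."""
    return max_rel_error(reference, candidate) <= rtol


# Synthetic loss curve standing in for a reference run (illustration only).
reference_loss = [2.5 * math.exp(-0.05 * step) + 0.8 for step in range(100)]
# Simulated run on different hardware: same values stored at half precision.
candidate_loss = [to_fp16(v) for v in reference_loss]

print(f"max relative error: {max_rel_error(reference_loss, candidate_loss):.2e}")
print("aligned at rtol=1e-3:", is_aligned(reference_loss, candidate_loss, 1e-3))
```

Half-precision rounding alone keeps every value within about 0.05% of the reference, so this simulated run passes a 1e-3 tolerance; in real training, differences in kernels and accumulation order compound over many steps, which is what makes alignment hard.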

For inference, the picture looks more favorable. A technical team at a regional state-owned enterprise tested multiple domestic chips against NVIDIA’s H20 in late 2025. When running models specifically optimized for these chips, such as DeepSeek-R1 and Alibaba’s Qwen series, both Baidu’s Kunlun P800 and Alibaba’s PPU (T-Head Zhenwu) delivered higher token throughput than the H20. This advantage comes with a caveat: adapting domestic chips to new models typically requires one to two months of optimization work.

Moore Threads and Metax, two domestic AI chip companies, went public in December 2025. Both hold roughly 1% market share. Both saw shares surge over 460% on their first day of trading, then retreat about 36% by late January, still leaving them at more than triple their IPO price.
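The remaining premium over the IPO price follows from chaining the two moves. This calculation assumes the 36% retreat is measured from the first-day close; if it was measured from a later, higher peak, the multiple would differ.

```python
# Figures from the text; IPO price normalized to 1.0.
first_day_gain = 4.60   # "surge over 460%" on the first day
retreat = 0.36          # "retreat about 36%" by late January

first_day_close = 1.0 + first_day_gain          # multiple of IPO price
late_january = first_day_close * (1 - retreat)  # assumption: retreat from first-day close
print(f"first-day close: {first_day_close:.2f}x IPO price")
print(f"late January:    {late_january:.2f}x IPO price")
```

Under that assumption, the shares sit at roughly 3.6 times their IPO price, and because the first-day gain was "over" 460%, that figure is closer to a floor than an estimate.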

The volatility tells a story. Both companies reported revenue growth exceeding 100% in 2025, but both remain unprofitable with losses in the billions of yuan. Moore Threads doesn’t expect to break even until 2027. Metax targets 2026 at the earliest. Investors are pricing in optionality on domestic chip adoption, not current fundamentals. The fact that valuations held after the initial euphoria faded suggests the bet is on a multi-year trajectory, not quarterly results.

The Training and Inference Split

A clear division has emerged. For inference workloads, domestic chips increasingly compete with NVIDIA’s performance-limited chips available in China. For training large foundation models, high-end NVIDIA chips remain essential.

One computer vision company’s experience illustrates the tradeoff. After training a small visual understanding model on an NVIDIA chip in 2025, the team replicated the process using Baidu’s Kunlun P800. They achieved the same results, but training took over 20% longer. Total costs ran significantly higher.
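Why a 20% longer run means higher total cost depends on the hourly price gap. In a simple model where total cost is training hours multiplied by cost per hour (ignoring adaptation labor and other overheads, which this sketch assumes away), the domestic chip breaks even only if its hourly cost is lower by a specific margin.

```python
def breakeven_discount(time_ratio):
    """Hourly-cost discount at which a slower chip matches total training cost.

    Total cost = training hours x cost per hour, so equal totals require
    cost_per_hour_slow / cost_per_hour_fast = 1 / time_ratio.
    """
    return 1 - 1 / time_ratio


# "Over 20% longer" from the text, i.e. a time ratio of at least 1.2.
print(f"required hourly discount: {breakeven_discount(1.2):.1%}")
```

A run that takes 1.2 times as long needs hourly costs at least about 16.7% lower just to break even; the company’s higher total cost implies the Kunlun P800 setup did not clear that bar.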

The US government’s stance remains stuck in bureaucratic limbo. Trump approved H200 exports to China in January 2026, but the chips cannot ship until the State Department, Defense Department, and Energy Department complete security reviews. Nearly two months later, Chinese customers are not placing orders. They’re waiting to see if licenses will actually arrive and what conditions will be attached.

The chip story is only one part of China’s compute equation. Whether efficiency can truly substitute for scale depends on three structural factors: energy economics, institutional friction, and hardware monetization. These explain why China’s approach might work for certain AI applications while remaining constrained in others.