Qwen 3.5 and the Margin Below

A strong open-source model at an aggressive price. The business logic lives one layer down.

On Chinese New Year’s Eve, while families across China were sitting down to reunion dinners, Alibaba released Qwen 3.5-Plus, its newest and most capable open-source AI model. The holiday timing was deliberate. A year ago, DeepSeek published its R1 reasoning model during the same pre-holiday window and generated global headlines, partly because Western newsrooms had fewer competing stories to run. Alibaba picked the same calendar slot.

The headline numbers are designed to impress: 397 billion total parameters with only 17 billion activated per inference call, thanks to a sparse mixture-of-experts (MoE) architecture that routes each input to a small subset of specialized sub-networks. Alibaba claims performance comparable to Google’s Gemini 3 Pro and OpenAI’s GPT-5.2 across a range of benchmarks, including knowledge reasoning, doctoral-level question answering, and agentic tasks. The model processes text and images natively, trained on mixed-modality data from the start rather than grafting vision capabilities onto a language model after the fact. It supports 201 languages. It handles video inputs up to two hours long.
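The routing idea can be illustrated with a toy top-k router. This is a generic sketch of sparse MoE routing, not Alibaba's implementation. The router weights, the four experts, `k=2`, and the helper names (`top_k_route`, `moe_forward`, `make_expert`) are all hypothetical. A linear router scores every expert for the input. Only the k highest-scoring experts run, and their outputs are mixed using softmax weights renormalized over the selected experts.

```python
import math
from typing import Callable, List, Sequence, Tuple

# A toy "expert": any function from a vector to a vector (a hypothetical
# stand-in for the feed-forward sub-networks in a real MoE layer).
Expert = Callable[[List[float]], List[float]]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts; renormalize weights over just those."""
    ranked = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_forward(x: List[float], router: Sequence[Sequence[float]],
                experts: Sequence[Expert], k: int) -> List[float]:
    """Score experts with a linear router, run only the top k, mix their outputs."""
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router]
    out = [0.0] * len(x)
    for idx, weight in top_k_route(logits, k):
        y = experts[idx](x)  # only the routed experts do any work
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out


# Usage: four toy experts that scale the input; record which ones actually run.
calls: List[int] = []


def make_expert(i: int) -> Expert:
    def expert(v: List[float]) -> List[float]:
        calls.append(i)
        return [(i + 1) * a for a in v]
    return expert


experts = [make_expert(i) for i in range(4)]
router = [[0.1, 0.0], [0.0, 1.0], [-1.0, 0.0], [1.0, 1.0]]  # hypothetical weights
y = moe_forward([1.0, 2.0], router, experts, k=2)
print(sorted(calls))  # [1, 3]: two of four experts ran
```

Production MoE layers do this per token in batched tensor form and usually add a load-balancing objective so that tokens spread across experts. The sketch leaves both out.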

These claims have not yet been independently verified. Every major model release in the past year has shipped with benchmark tables arranged to flatter the releasing company, and selective reporting is now an industry norm rather than an exception. The performance debate will play out over weeks. The price is worth examining now.

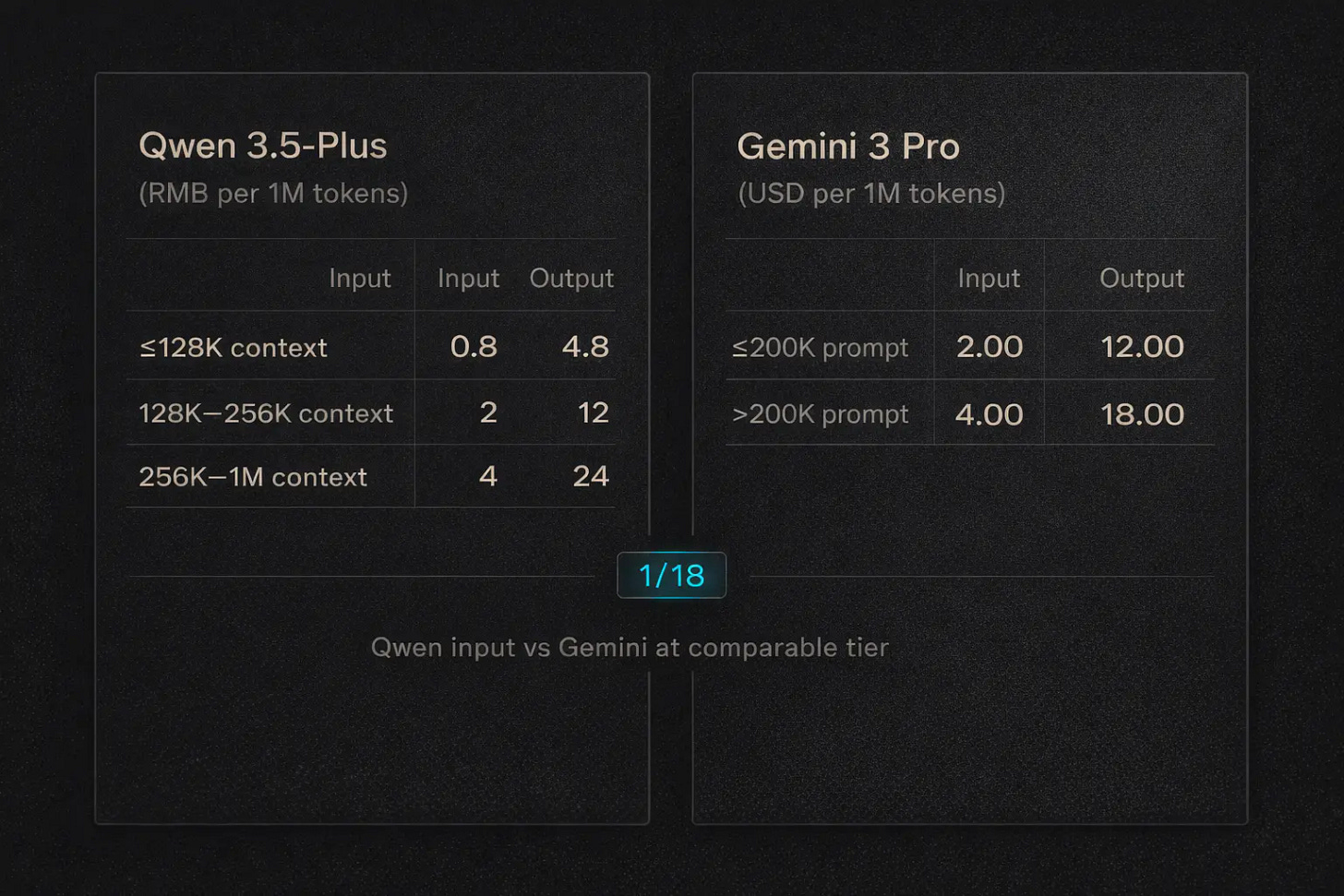

Alibaba set the API input price for Qwen 3.5-Plus at 0.8 yuan per million tokens, roughly $0.11, for requests up to 128,000 tokens. At that tier, a developer pays approximately one-eighteenth of what Gemini 3 Pro charges for comparable input volume. The ratio narrows at longer context lengths but remains substantial across all tiers. Even allowing for differences in how the two companies define equivalent workloads, that is an extraordinarily aggressive price point for a model positioned alongside frontier offerings from Google and OpenAI.
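The per-token arithmetic is easy to check. The sketch below converts the yuan price to dollars and shows what an 18x ratio implies for a competitor's input price and for a monthly bill. The exchange rate of 7.2 yuan per dollar and the 500-million-token workload are assumptions for illustration. The competitor figure is derived from the article's ratio, not taken from Google's price list.

```python
# Assumption: roughly 7.2 yuan per US dollar; the real rate moves daily.
CNY_PER_USD = 7.2

# Qwen 3.5-Plus input price for requests up to 128K tokens, as reported.
QWEN_INPUT_CNY_PER_M = 0.8


def cny_to_usd(amount_cny: float, rate: float = CNY_PER_USD) -> float:
    """Convert yuan to dollars at a fixed (assumed) rate."""
    return amount_cny / rate


def input_cost_usd(tokens: int, usd_per_million: float) -> float:
    """Cost of sending `tokens` input tokens at a per-million-token price."""
    return tokens / 1_000_000 * usd_per_million


qwen_usd_per_m = cny_to_usd(QWEN_INPUT_CNY_PER_M)  # about 0.111
implied_rival_usd_per_m = qwen_usd_per_m * 18      # what "one-eighteenth" implies

# Hypothetical workload: 500 million input tokens per month.
monthly_tokens = 500_000_000
print(f"Qwen 3.5-Plus: ${input_cost_usd(monthly_tokens, qwen_usd_per_m):,.2f}")
print(f"At 18x:        ${input_cost_usd(monthly_tokens, implied_rival_usd_per_m):,.2f}")
```

Under these assumptions the same workload costs about $55.56 at Qwen's rate and $1,000.00 at eighteen times that rate, which puts the implied competitor price near $2 per million input tokens.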

Architectural efficiency does not fully explain the gap.

The Efficiency Argument and Its Limits

Four engineering choices underpin the low price. The sparse MoE design activates less than 5 percent of total parameters (17 billion of 397 billion) per inference call. A hybrid attention mechanism reduces computation on long contexts by letting the model allocate resources unevenly across input tokens, concentrating on passages that matter and skimming those that do not. Multi-token prediction allows the model to generate several tokens per forward pass rather than one at a time. And an attention gating mechanism, drawn from research that won a best paper award at NeurIPS 2025, stabilizes training at scale and improves output precision.
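Two of these claims reduce to simple arithmetic. The sketch checks the activation share implied by 17 billion active out of 397 billion total parameters, and shows how multi-token prediction cuts the number of forward passes. The three-tokens-per-pass setting and the `forward_passes` helper are hypothetical. The article does not say how many tokens Qwen 3.5-Plus predicts per pass, and practical schemes can reject some drafted tokens.

```python
import math

TOTAL_PARAMS_B = 397  # billions of parameters, as reported
ACTIVE_PARAMS_B = 17  # billions activated per inference call, as reported

activation_ratio = ACTIVE_PARAMS_B / TOTAL_PARAMS_B
print(f"Active share of parameters: {activation_ratio:.1%}")  # 4.3%


def forward_passes(n_tokens: int, tokens_per_pass: int) -> int:
    """Idealized number of decoder passes needed to emit n_tokens.

    Assumes every predicted token is accepted. Real multi-token prediction
    schemes verify drafts and may discard some, so this is a best case.
    """
    return math.ceil(n_tokens / tokens_per_pass)


# Hypothetical: a 1,000-token answer at one token per pass vs. three per pass.
print(forward_passes(1000, 1), forward_passes(1000, 3))  # 1000 334
```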

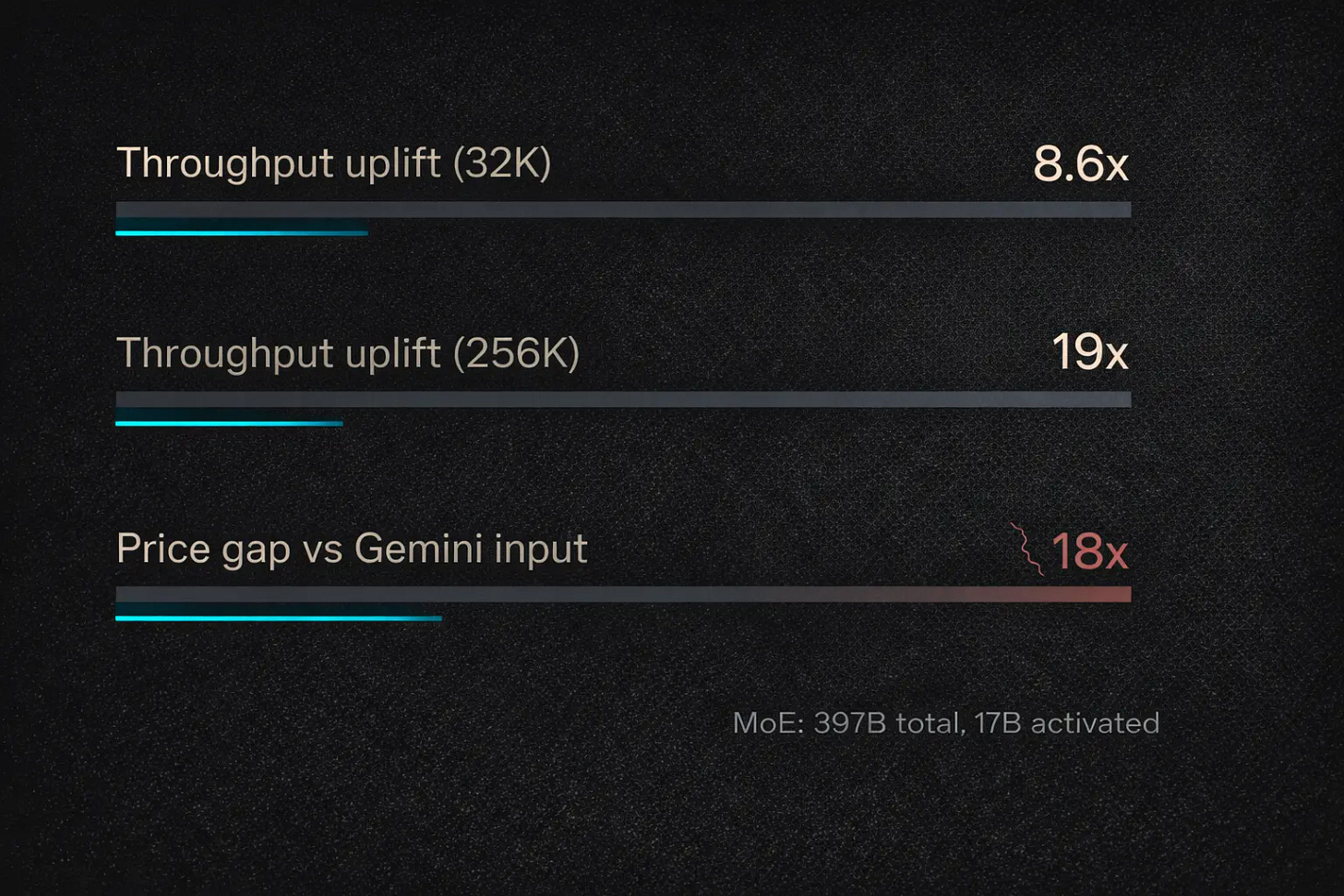

These are genuine technical contributions. Alibaba reports that, together, they raise inference throughput 8.6x at standard context lengths and up to 19x at 256,000 tokens, compared with the previous-generation Qwen 3-Max model.

But three things limit how far efficiency can explain the pricing.

First, the 8.6x throughput gain is measured against Alibaba’s own prior model. It says nothing about cost relative to Gemini 3 Pro, which runs on a different architecture, different hardware, and a different pricing structure. An 8.6x improvement over your own previous model does not translate into an 18x advantage over a competitor.
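The mismatch between the two ratios can be made explicit. Under the simplifying assumption that price tracks serving cost one-for-one, an 8.6x self-improvement becomes an 18x advantage over a rival only if the previous model was already about twice as cheap to serve as that rival. The helper names are hypothetical. The 8.6x figure is the standard-context number, since the 19x figure applies only at 256,000 tokens.

```python
def price_gap_from_efficiency(baseline_gap: float, self_speedup: float) -> float:
    """Rival-to-own cost ratio after a self_speedup, given the old ratio.

    baseline_gap: how many times cheaper the previous model was to serve than
    the rival (1.0 means parity). Assumes cost per token falls in proportion
    to throughput and that price tracks cost; both are simplifications.
    """
    return baseline_gap * self_speedup


def required_baseline_gap(target_gap: float, self_speedup: float) -> float:
    """Prior cost advantage needed for self_speedup to reach target_gap."""
    return target_gap / self_speedup


SPEEDUP = 8.6     # reported throughput gain over Qwen 3-Max, standard context
PRICE_GAP = 18.0  # input-price ratio versus Gemini 3 Pro at the 128K tier

print(price_gap_from_efficiency(1.0, SPEEDUP))             # 8.6 if starting from parity
print(f"{required_baseline_gap(PRICE_GAP, SPEEDUP):.2f}")  # 2.09
```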

Second, MoE architectures save compute but not memory. All 397 billion parameters must reside in GPU memory regardless of how many activate per call. The hardware bill for hosting the model does not shrink in proportion to the activation ratio.
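The memory point can be quantified with a rough, weights-only estimate. The precisions, the 80 GB accelerator size, and the `weights_gb` helper are assumptions; the article does not say how Alibaba serves the model. The estimate ignores the KV cache, activations, and replication for throughput, so real deployments need more memory than shown.

```python
import math

TOTAL_PARAMS = 397e9  # as reported
ACTIVE_PARAMS = 17e9  # as reported

# Bytes per parameter at common serving precisions (assumed; the actual
# serving precision is not disclosed in the article).
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "int4": 0.5}

GPU_MEMORY_GB = 80  # hypothetical accelerator with 80 GB of memory


def weights_gb(params: float, precision: str) -> float:
    """Memory needed just to hold the weights, in gigabytes (1e9 bytes)."""
    return params * BYTES_PER_PARAM[precision] / 1e9


for precision in BYTES_PER_PARAM:
    resident = weights_gb(TOTAL_PARAMS, precision)  # must all sit in memory
    active = weights_gb(ACTIVE_PARAMS, precision)   # touched per token
    gpus = math.ceil(resident / GPU_MEMORY_GB)
    print(f"{precision}: {resident:.1f} GB resident, {active:.1f} GB active, "
          f"at least {gpus} x {GPU_MEMORY_GB} GB GPUs for weights alone")
```

At bf16 the resident weights come to about 794 GB, while the 17 billion active parameters account for about 34 GB. Memory scales with the total parameter count, compute with the active subset.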

Third, Alibaba serves this model through its own cloud, so the API price must also cover infrastructure, networking, and operational overhead on top of raw inference cost.

Add these together, and the gap between the efficiency gains and the 18x price advantage is too wide to attribute to architecture alone. The pricing almost certainly carries a subsidy component. The question is where that subsidy earns its return.