The Demand Layer: Understanding China's AI Market Through Tokens, Tenders and Pricing

How Thirty Trillion Daily Tokens, Public Procurement and Workflow Economics Are Building the World's Deepest AI Usage Foundation.

China consumed at least thirty trillion AI tokens yesterday. That is the most conservative reading of a single disclosure from ByteDance.

The company says its Doubao model alone now processes no fewer than thirty trillion tokens a day. That is one model, inside one company. If a single corporate stack is already burning compute at that scale, China’s true daily AI token consumption is much larger. Most of those tokens never show up in chatbots or casual experimentation. They are produced by content pipelines, moderation tools, government service platforms and software agents that operate around the clock.

This is the clearest sign that China’s AI market has entered a new phase. A token is the basic unit an AI model reads or generates, similar to a fragment of a word. A single email reply can produce hundreds of tokens. Once models sit inside workflows rather than isolated applications, token consumption grows at industrial speed.

Three forces now define China’s AI trajectory:

First is the surge in token throughput across consumer and enterprise systems.

Second is the expansion of institutional adoption through public tenders.

Third is the rapid evolution of pricing from discounts toward full workflow economics.

Together, these forces are constructing an AI demand engine on a national scale. The structure of this engine offers a view into how the world’s largest digital economy is absorbing frontier technology.

Token Growth at Infrastructure Scale

China’s token consumption has risen in a pattern that looks more like a surge in national bandwidth usage than conventional product adoption. Government statistics show daily token consumption climbing from roughly one hundred billion to thirty trillion in a period of about eighteen months. Consumer platforms account for a significant portion of this volume.
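The two endpoints above imply a steep compound rate. The back-of-the-envelope calculation below uses only the article's figures. It treats "about eighteen months" as exactly eighteen, which is an assumption, and it computes the constant monthly growth rate and doubling time that would connect the two numbers.

```python
import math

# Endpoints from the government statistics cited above (tokens per day).
start_tokens = 100e9   # roughly one hundred billion
end_tokens = 30e12     # thirty trillion
months = 18            # "about eighteen months", treated as exact here

# Overall multiple and the constant monthly rate that would produce it.
multiple = end_tokens / start_tokens
monthly_rate = multiple ** (1 / months) - 1

# Months needed for daily consumption to double at that constant rate.
doubling_months = math.log(2) / math.log(1 + monthly_rate)

print(f"overall multiple: {multiple:.0f}x")
print(f"implied monthly growth: {monthly_rate:.1%}")
print(f"doubling time: {doubling_months:.1f} months")
```

This prints a 300x multiple, about 37 percent growth per month and a doubling time of roughly 2.2 months. A constant rate is a simplification, because real growth was almost certainly uneven across the period.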

ByteDance’s Doubao model is embedded deeply across Douyin, the Chinese sibling of TikTok. It assists in video summarisation, content moderation, search ranking, comment analysis and advertising systems. These tasks run continuously and generate enormous token traffic. ByteDance reports that Doubao’s daily usage recently rose from around twelve hundred billion tokens to thirty trillion. Expansion at this scale becomes possible only when a model is positioned inside the core operating logic of a platform that reaches hundreds of millions of daily active users.

But consumer platforms tell only half the story. The other half comes from how developers are wiring models into continuous workflows. In the past year, coding-oriented model subscriptions have emerged across the ecosystem. These plans encourage developers to use models as persistent components of their toolchains.

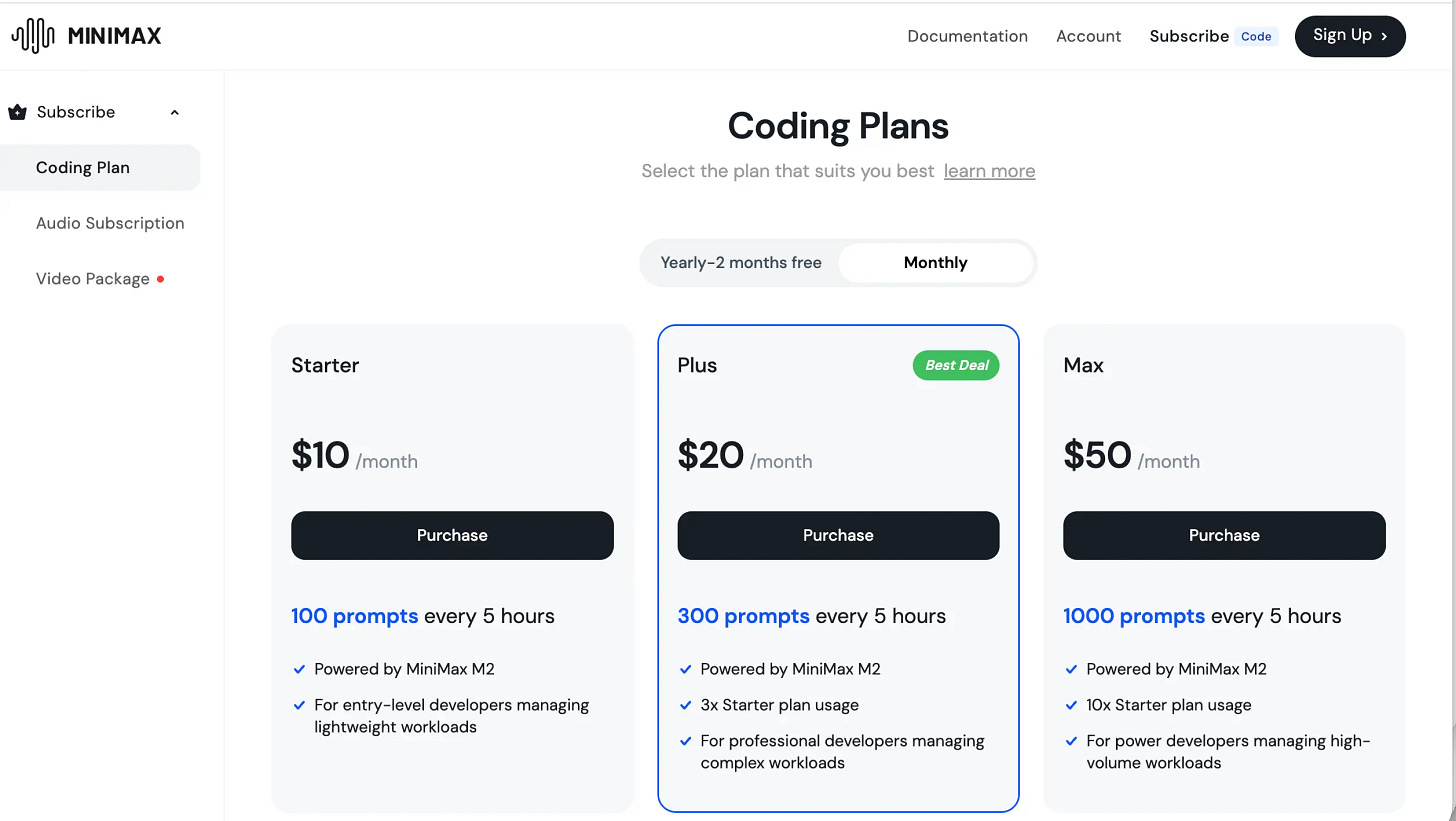

Coding agents are integrated directly into development environments, command-line tools and continuous integration pipelines. Instead of responding to individual prompts, they participate in multi-step tasks such as refactoring, debugging or scaffolding entire projects. Zhipu, ByteDance, Kimi and MiniMax have launched monthly plans that allow developers to issue a fixed number of requests every few hours. MiniMax’s M2 plan is one such example. Because the cap counts requests rather than tokens, developers no longer need to monitor token expenditure at a granular level, and they are encouraged to embed AI models into long-running processes.
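The subscription mechanics described above amount to a rolling request quota rather than per-token billing. The sketch below models that idea under stated assumptions. The class name, the quota size (`max_requests`) and the window length (`window_hours`) are hypothetical placeholders, not the terms of MiniMax's M2 plan or any other provider's plan, and real plans may reset at fixed times instead of using a sliding window.

```python
from collections import deque


class RollingRequestQuota:
    """Sliding-window request cap: at most `max_requests` calls within any
    `window_hours` span. Quota values are hypothetical placeholders."""

    def __init__(self, max_requests: int, window_hours: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_hours * 3600
        self._timestamps: deque = deque()  # times (seconds) of accepted requests

    def _evict_expired(self, now: float) -> None:
        # Forget requests that have aged out of the window.
        while self._timestamps and now - self._timestamps[0] >= self.window_seconds:
            self._timestamps.popleft()

    def try_request(self, now: float) -> bool:
        """Accept and record a request at time `now` if quota remains."""
        self._evict_expired(now)
        if len(self._timestamps) < self.max_requests:
            self._timestamps.append(now)
            return True
        return False

    def remaining(self, now: float) -> int:
        """Requests still available at time `now`."""
        self._evict_expired(now)
        return self.max_requests - len(self._timestamps)


# Hypothetical plan: 3 requests per 5-hour window, kept small for readability.
quota = RollingRequestQuota(max_requests=3, window_hours=5)
print([quota.try_request(t) for t in (0, 60, 120, 180)])  # [True, True, True, False]
print(quota.try_request(5 * 3600))  # True: the request made at t=0 has expired
```

Note that nothing in the quota inspects token counts. A single accepted request may carry a large or small prompt, which is why this billing style makes it cheap, from the developer's point of view, to leave an agent running.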

Once an agent becomes part of a workflow, usage grows continuously. Code generation, test creation, build automation and documentation tasks all consume tokens. The longer these workflows run, the more tokens they generate.
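A simple model of an agent loop shows why longer runs consume disproportionately more tokens. It assumes, as is common with chat-style APIs though the article does not say so, that each step re-sends the whole history as input. Under that assumption, cumulative input tokens grow roughly quadratically with the number of steps. The function name and per-step token counts are hypothetical.

```python
def agent_loop_tokens(steps: int, system_prompt: int, tokens_per_step: int) -> tuple[int, int]:
    """Return (input_tokens, output_tokens) summed over an agent run.

    Hypothetical model: every step re-sends the system prompt plus all prior
    outputs as context, then appends `tokens_per_step` new output tokens.
    Caching, truncation and summarisation are ignored.
    """
    total_input = 0
    total_output = 0
    context = system_prompt
    for _ in range(steps):
        total_input += context            # the whole history is read again
        total_output += tokens_per_step   # new code, tests, logs, etc.
        context += tokens_per_step        # output becomes part of the history
    return total_input, total_output


for steps in (10, 20, 40):
    inp, out = agent_loop_tokens(steps, system_prompt=2_000, tokens_per_step=500)
    print(f"{steps:>3} steps: {inp:>9,} input tokens, {out:>6,} output tokens")
```

In this toy model, doubling a run from 20 to 40 steps doubles output tokens (10,000 to 20,000) but raises input tokens about 3.5 times (135,000 to 470,000). This is the pattern behind the claim that longer workflows generate more tokens.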

Consumer systems produce token volume through breadth. Enterprise and developer systems produce token volume through depth. Together they explain why China’s token economy behaves like infrastructure rather than user-driven interaction. The numbers reflect sustained, embedded usage, not sporadic demand.

Institutional Adoption Through Public Tenders

The second force shaping China’s AI market is institutional adoption through public tenders. This mechanism is central to the country’s digital transformation. It is also one of the least understood dynamics outside China.

In the third quarter of 2025, more than two thousand AI-related tenders were awarded nationwide, with nearly ten billion yuan in disclosed contract value. Most of these projects were not about training large models or procuring computing hardware. Sixty-one percent focused on applications. Education, government services and finance were the most active sectors. These categories involve heavy document processing, customer interaction and operational decision making. They are also significant consumers of tokens.

A recent national-level project illustrates how this system works. China’s main online government service portal conducted a public tender for an AI-powered customer service system. A state-linked media and data company won the contract. The deployed model now guides citizens and businesses through service procedures, assists with document submission, routes tickets to the appropriate departments and manages follow-up tasks. The assistant operates continuously and has become part of the daily workflow of a platform used by millions.

Similar developments can be seen at the city level. In an eastern city, the local public resources trading centre integrated a large model into its bidding platform. A virtual assistant provides round-the-clock support for companies preparing to participate in tenders. It explains registration procedures, helps interpret policies and resolves common issues. The centre is piloting additional features such as model-based tender document checks and AI-assisted scoring. Early tests indicate that document review times have dropped from hours to minutes.

Institutional adoption produces several structural effects. It generates scale because government portals, trading platforms and financial institutions handle thousands of interactions each day.

It creates stability because projects are funded through annual budget cycles and contracts often run for multiple years.

It supports domestic providers because many tenders include requirements related to data localisation, on-premises deployment or compatibility with existing government systems.

China’s adoption curve is therefore built on formal procurement and operational commitment. This foundation differs from the consumer-driven adoption curves seen in other markets. It is anchored in public services and enterprise workflows rather than individual experimentation.

How Tokens and Tenders Reinforce Each Other

Token growth and public tenders are not separate stories. They form the two pillars of China’s AI demand engine.

Token throughput creates usage data. It reveals how models handle real tasks, where errors appear and how users interact with automated systems.

Public tenders create stable demand and long-term integration. They ensure that AI systems are deployed in environments that run continuously and handle operational workloads.

Once these conditions exist, pricing becomes a tool for managing large-scale usage rather than a promotional tactic. The pricing strategies that emerged in 2025 can be understood only through the lens of sustained token growth and expanding institutional adoption.

Pricing Moves Toward Workflow Economics

The third force shaping China’s AI market is pricing. The country experienced one of the fastest price collapses in the global model industry during 2024. Alibaba reduced prices on some long-context models by more than ninety percent. ByteDance lowered the cost of several Doubao models across its lineup. Some base models across the ecosystem became free. This period reduced barriers to experimentation and accelerated onboarding.

In 2025, the market began to settle into recognisable tiers. At the lower end, free models supported wide distribution. Baidu’s ERNIE-Speed, ERNIE-Lite and parts of the ERNIE 4.5 family fall into this layer. They handle common tasks at essentially no cost, making them ideal for light enterprise workflows.

The next layer consists of low-cost production models such as ERNIE 4.5 Turbo and earlier Qwen variants. These models are suited for high-throughput applications with limited reasoning requirements.

The middle tier includes reasoning models designed for multi-step cognitive tasks such as planning and complex evaluation. Baidu’s ERNIE X1 sits in this category. Its pricing is roughly half the cost of competing domestic reasoning models and significantly lower than Baidu’s earlier APIs. This makes it attractive for organisations seeking deeper cognitive capabilities without committing to premium pricing.

The top tier includes flagship models from Kimi, Zhipu and Alibaba. Their prices have converged within a common band. Input costs cluster within a narrow range, and output costs settle at roughly two to three cents per thousand tokens. This convergence signals a stable premium segment.
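Providers quote prices per thousand or per million tokens, so a small helper makes the premium-tier figures easier to compare. The article does not name the currency, so the sketch stays unit-agnostic. Only the two-to-three-cents output range comes from the text; the helper names, the input price and the request sizes are hypothetical.

```python
def per_million(price_per_thousand: float) -> float:
    """Convert a per-1,000-token price to a per-1,000,000-token price."""
    return price_per_thousand * 1_000


def request_cost(input_tokens: int, output_tokens: int,
                 input_price_per_k: float, output_price_per_k: float) -> float:
    """Cost of one request, with both prices quoted per 1,000 tokens."""
    return ((input_tokens / 1_000) * input_price_per_k
            + (output_tokens / 1_000) * output_price_per_k)


# Output range from the premium tier described above (currency unspecified).
for out_price_cents in (2.0, 3.0):
    per_m_units = per_million(out_price_cents) / 100  # cents -> whole currency units
    print(f"{out_price_cents:.0f} cents per 1K tokens = {per_m_units:.0f} units per 1M tokens")

# Hypothetical request: 4,000 input tokens at an assumed 0.5 cents per 1K,
# plus 1,000 output tokens at 2.5 cents per 1K.
print(f"{request_cost(4_000, 1_000, 0.5, 2.5):.1f} cents")
```

At two to three cents per thousand, a million output tokens costs twenty to thirty units of whatever currency those cents belong to. Quoting per million makes it easier to line these figures up against price sheets published in that unit.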

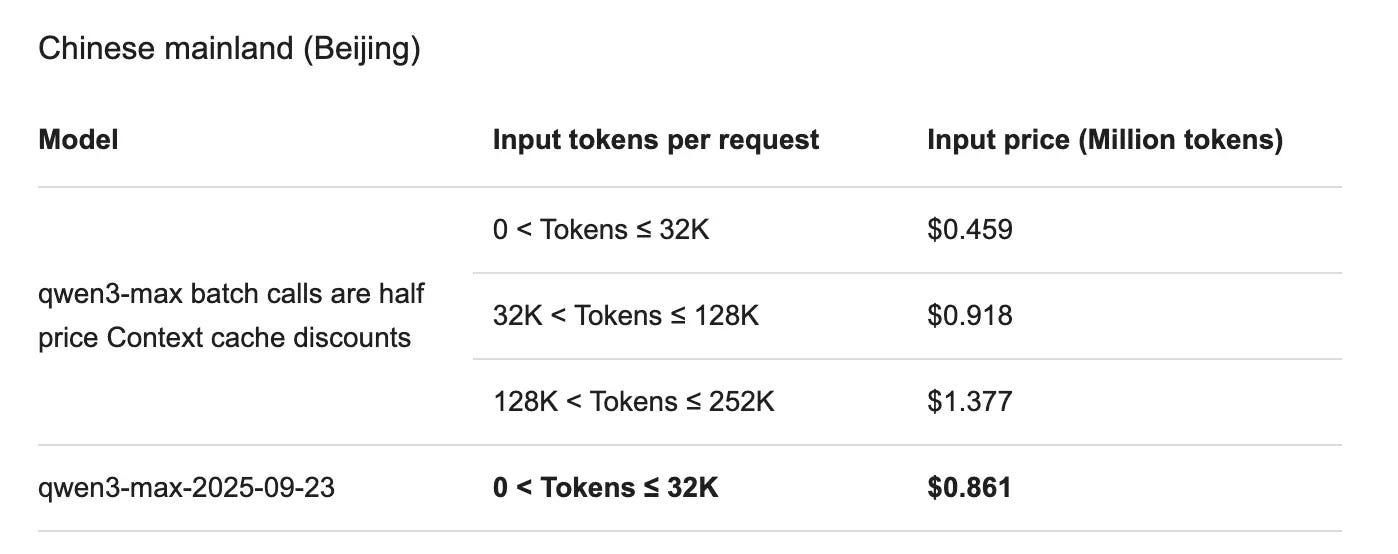

Yet price levels are only part of the story. A newer strategy has emerged that focuses on cost engineering for enterprise users. Alibaba’s Qwen3-Max illustrates this shift. While its headline rates for smaller requests are lower than those of earlier versions, the most substantial savings come from workflow-based optimisations:

Batch processing reduces costs for bulk tasks.

Implicit caching lowers the price of repeated inputs.

Explicit caching charges slightly more for creation but keeps subsequent calls at very low cost.

These features allow enterprises to redesign their workflows with cost in mind. For teams processing repetitive documents or structured prompts, caching can dramatically reduce expenses.
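The effect of these three features on a repetitive workload can be sketched with a simple cost model. The multipliers below are illustrative assumptions chosen only to mirror the qualitative pattern described above: batch discounts, cheap cache hits and a small premium to create an explicit cache. They are not quoted from Alibaba's Qwen3-Max price list, and the workload, base prices and function names are hypothetical.

```python
from dataclasses import dataclass

# Illustrative assumptions only; real multipliers vary by provider and model.
BATCH_MULT = 0.5             # batch jobs billed at a discount
IMPLICIT_HIT_MULT = 0.2      # repeated prefix served from an implicit cache
EXPLICIT_CREATE_MULT = 1.25  # creating an explicit cache costs a bit more
EXPLICIT_HIT_MULT = 0.1      # later reads of the explicit cache are cheap


@dataclass
class Workload:
    requests: int       # number of calls
    shared_prefix: int  # tokens repeated in every call (instructions, template)
    unique_input: int   # tokens that differ per call (the document itself)
    output: int         # tokens generated per call
    in_price: float     # price per 1,000 input tokens (hypothetical)
    out_price: float    # price per 1,000 output tokens (hypothetical)


def _k(tokens: float) -> float:
    return tokens / 1_000


def baseline(w: Workload) -> float:
    per_call = _k(w.shared_prefix + w.unique_input) * w.in_price + _k(w.output) * w.out_price
    return w.requests * per_call


def batch(w: Workload) -> float:
    # Assumes the discount applies to input and output alike.
    return baseline(w) * BATCH_MULT


def prefix_cached(w: Workload, first_mult: float, hit_mult: float) -> float:
    """First call pays `first_mult` on the prefix; later calls pay `hit_mult`.
    Assumes every later call hits the cache and the cache never expires."""
    prefix_cost = _k(w.shared_prefix) * w.in_price * (first_mult + (w.requests - 1) * hit_mult)
    other_cost = w.requests * (_k(w.unique_input) * w.in_price + _k(w.output) * w.out_price)
    return prefix_cost + other_cost


w = Workload(requests=1_000, shared_prefix=8_000, unique_input=1_000,
             output=500, in_price=1.0, out_price=4.0)
print(f"baseline:       {baseline(w):>8.1f}")
print(f"batch:          {batch(w):>8.1f}")
print(f"implicit cache: {prefix_cached(w, 1.0, IMPLICIT_HIT_MULT):>8.1f}")
print(f"explicit cache: {prefix_cached(w, EXPLICIT_CREATE_MULT, EXPLICIT_HIT_MULT):>8.1f}")
```

In this toy workload the shared prefix dominates the input, so caching cuts the bill from 11,000 units to about 4,606 (implicit) or 3,809 (explicit), while batching alone halves it to 5,500. The savings shrink as the repeated prefix becomes smaller relative to unique content and output. Whether batching and caching can be combined depends on the provider and is not modelled here.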

Baidu has taken a different approach with a structured product ladder. Free models sit at one end of the spectrum. ERNIE X1 occupies the middle tier with competitive reasoning capabilities. ERNIE 5.0 anchors the premium tier with performance and pricing aligned with other top Chinese models. This structure offers enterprises a clear pathway from experimentation to premium deployment.
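One way an enterprise might use such a ladder is to send each task to the cheapest tier that meets its requirements and to escalate only when a cheaper tier falls short. The sketch below is a hypothetical router. The tier names follow the article's four layers, but the capability flags, class and function names are invented placeholders, not Baidu's or any other provider's actual specifications.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    reasoning: bool   # handles multi-step reasoning tasks
    premium: bool     # flagship-level quality
    production: bool  # intended for sustained production throughput


# Hypothetical ladder, ordered from cheapest to most expensive.
# Capability flags are illustrative assumptions, not vendor specifications.
LADDER = (
    Tier("free", reasoning=False, premium=False, production=False),
    Tier("low-cost production", reasoning=False, premium=False, production=True),
    Tier("reasoning", reasoning=True, premium=False, production=True),
    Tier("premium", reasoning=True, premium=True, production=True),
)


def pick_tier(needs_reasoning: bool, needs_premium: bool, needs_production: bool) -> Tier:
    """Return the cheapest tier that satisfies every stated requirement."""
    for tier in LADDER:
        if needs_reasoning and not tier.reasoning:
            continue
        if needs_premium and not tier.premium:
            continue
        if needs_production and not tier.production:
            continue
        return tier
    raise ValueError("no tier satisfies the requirements")


print(pick_tier(False, False, False).name)  # free
print(pick_tier(False, False, True).name)   # low-cost production
print(pick_tier(True, False, True).name)    # reasoning
print(pick_tier(True, True, True).name)     # premium
```

Because the ladder is ordered by cost, a task moves up a tier only when a cheaper one lacks a required capability. This mirrors the pathway from experimentation to premium deployment described above.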

Across the ecosystem, pricing is becoming an instrument for managing sustained usage. Providers align incentives around predictable costs. Enterprises integrate models into production workflows with confidence. The market is maturing into an industrial system where cost structures shape deployment strategies.

What China’s Demand Engine Means for Global Competition

The combination of tokens, tenders and pricing reveals a distinctive trajectory for China’s AI development. The country is generating one of the largest real-world datasets of model interactions. These interactions reflect genuine task distribution, user behaviour and error patterns. They form a training base that grows organically as models continue to operate in production environments.

Institutional adoption ensures that AI deployment is not dependent on consumer cycles. Government agencies, public service portals and financial institutions incorporate models into daily operations. Once embedded, systems rarely revert to previous processes. This creates long-term usage and drives deeper integration.

Pricing innovation shows that the market is entering a mature phase. Enterprises understand how to plan for token usage. Developers structure workflows around batch processing and caching. Model providers refine product ladders and segment their offerings. The market behaves more like a disciplined industry than an experimental field.

China’s AI economy is becoming an operating layer for both consumer and institutional systems. Token throughput is rising like a national utility. Tenders are turning AI into public infrastructure. Pricing is evolving to support long running workflows.

This combination is shaping one of the world’s most powerful AI demand engines. It influences how models are used, how systems are built and how value is created. The global narrative often centres on model benchmarks and chip restrictions, yet the deeper question is demand.

The future of AI competition may depend less on who builds the most advanced model and more on who builds the deepest, most stable foundation of usage. China is already several steps into that future.
